AI tools are making it faster and easier for therapists to complete back office tasks so they can focus more time and energy on their clients. But when it comes to handling sensitive information—like session notes, emails, and appointment times—AI has the potential to compromise clients’ privacy.

Before you start using AI in your therapy practice, here is what you need to know to ensure you remain HIPAA-compliant.

How does AI violate HIPAA rules?

This article in the HIPAA Journal gives a detailed breakdown of the ways AI may compromise clients’ privacy and violate HIPAA rules.

The most important takeaway is that, when you provide AI with protected health information (PHI), you usually do not have control over how that information is used. Once it leaves your hands, PHI may be used in ways that violate HIPAA:

- Employees at the company that hosts the AI may be able to access PHI

- The AI or its parent company may use data beyond the minimum amount necessary to complete its task

- The PHI may be mishandled in a way that allows it to be accessed by outside parties

- Clients’ PHI may be used outside the scope of the HIPAA Privacy Rule

The HIPAA Privacy Rule specifies how PHI may be used without prior authorization from its owner (the client). That includes treatment, payment, and healthcare operations (TPO) and disclosure to law enforcement. It does not include marketing, research, or other activities outside the essential day-to-day operation of your therapy practice.

Many AI tools use input from users to train AI. Training AI falls outside the scope of PHI uses that do not require patient authorization. So, unless your client has authorized the use of their PHI to train AI, you violate HIPAA by giving it to an AI tool that trains on user input.

How do therapists violate HIPAA by using AI?

You violate HIPAA rules when you use non-HIPAA-compliant AI tools to handle PHI.

That includes using AI tools to:

- Transcribe sessions with clients

- Generate session notes

- Compose letters or emails to specific (named) clients

- Analyze, summarize, or compile client treatment histories or medical records

- Generate contracts or forms in which specific clients’ names or addresses are included

- Schedule sessions or create calendar events where clients’ names are used

- Compile or reformat clients’ personal information (e.g., creating a contact list for all of your clients)

- Answer any questions during a chatbot session where you use client names or personal details

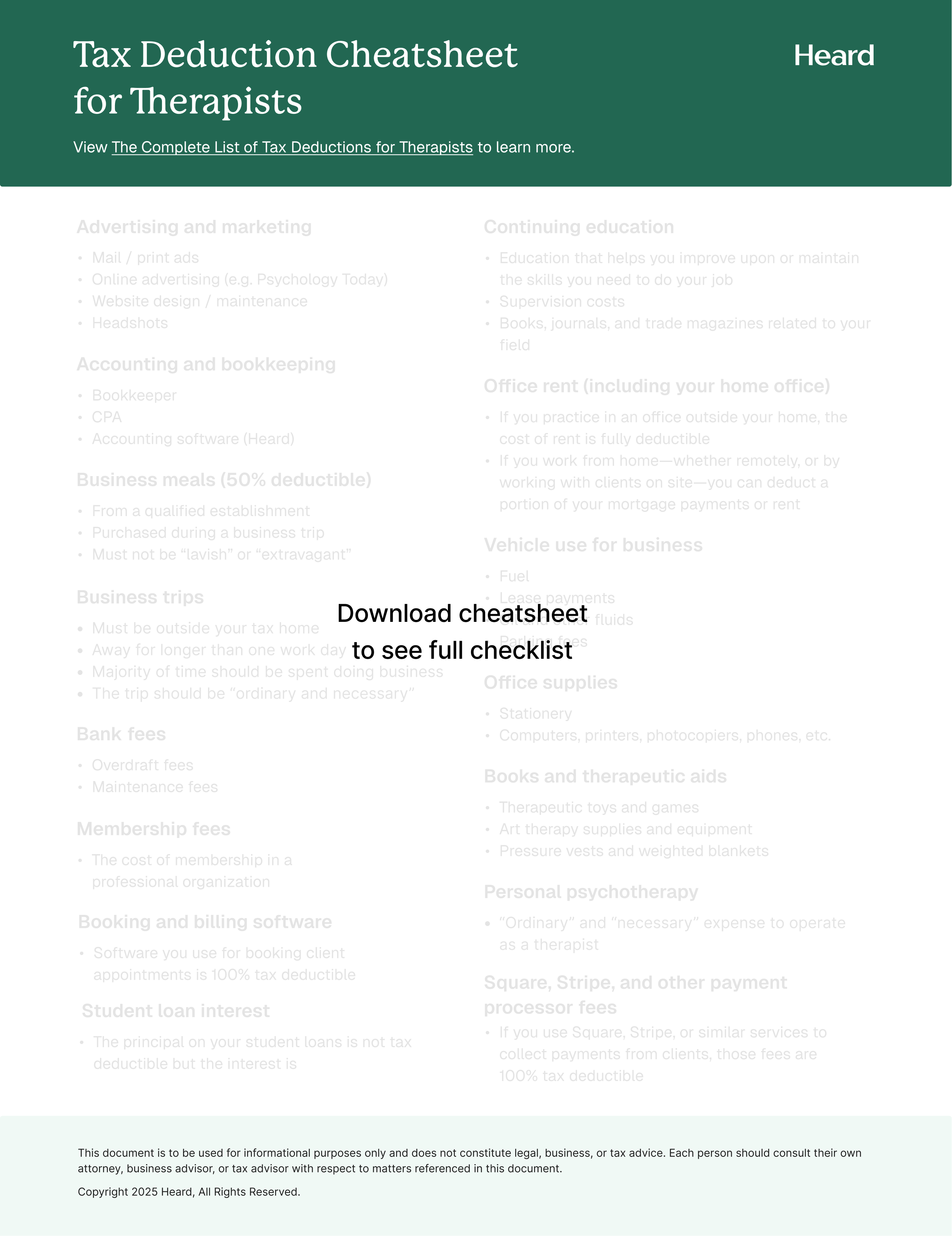


Is ChatGPT HIPAA-compliant?

ChatGPT is not HIPAA-compliant. Because OpenAI (the company that owns ChatGPT) uses user input to train its AI, any PHI you provide to ChatGPT may be used in ways that HIPAA does not authorize.

Keep in mind that many AI tools use ChatGPT to run their backends. For instance, you might use an AI transcription tool to create a transcript of a client session. On the surface, you use the tool’s interface to generate the transcript, but in the background the information is being shared with ChatGPT. Before using any AI tool, make sure that it is HIPAA-compliant and will not share your input with non-HIPAA-compliant AI.

What are some HIPAA-compliant ChatGPT alternatives?

BastionGPT and CompliantChatGPT are both HIPAA-compliant AI tools that offer similar functionality to ChatGPT, along with features tailored to medical professionals.

When you enter PHI into either BastionGPT or CompliantChatGPT, the information is anonymized before being submitted to ChatGPT. All identifying information is removed, so that AI can process it without ever coming into contact with client data.
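To make the idea of anonymizing text before it reaches an AI more concrete, here is a minimal Python sketch. It is not how BastionGPT or CompliantChatGPT work internally (their methods are not described here), and the names `redact`, `PATTERNS`, and `client_names` are hypothetical, chosen only for illustration. It assumes you can supply a list of client names, and it catches only a few simple patterns, so it would not be enough on its own to de-identify PHI under HIPAA.

```python
import re

# Hypothetical patterns for illustration only. Real de-identification has to
# cover all 18 identifier types in HIPAA's Safe Harbor method, not these three.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[\s.-]?\d{3}[\s.-]?\d{4}\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
}


def redact(text, client_names=()):
    """Replace known client names and simple pattern matches with tags."""
    # Names cannot be found reliably by pattern, so this sketch assumes the
    # caller passes in the names it wants removed.
    for name in client_names:
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    # Swap each pattern match for a bracketed label such as [PHONE].
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    note = "Jane Doe called from 555-123-4567 on 3/14/2024 about rescheduling"
    print(redact(note, client_names=["Jane Doe"]))
```

A sketch like this misses nicknames, addresses, and details that identify a client through context, which is one reason to rely on a vendor that signs a BAA rather than on homemade redaction.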

How can I be sure AI is HIPAA-compliant?

Many AI tools now on the market offer HIPAA-compliant solutions to therapists and medical professionals. But the only way to be certain that a particular tool is HIPAA-compliant is to confirm that the company behind it will sign a business associate agreement (BAA) with you.

Any company whose tool you use to handle clients’ PHI must sign a BAA with you. Signing a BAA is standard when you hire a third party to handle PHI. For instance, when you purchase electronic health record (EHR) software for your practice, you sign a BAA with the company providing the software. The BAA contractually obligates that company to handle PHI in compliance with HIPAA rules.

If a company won’t provide you with a BAA, steer clear of them—any PHI you input into their AI tool is de facto compromised.

—

Learn more about How AI Will Impact Therapists.

This post is to be used for informational purposes only and does not constitute legal, business, or tax advice. Each person should consult their own attorney, business advisor, or tax advisor with respect to matters referenced in this post.

Bryce Warnes is a West Coast writer specializing in small business finances.
